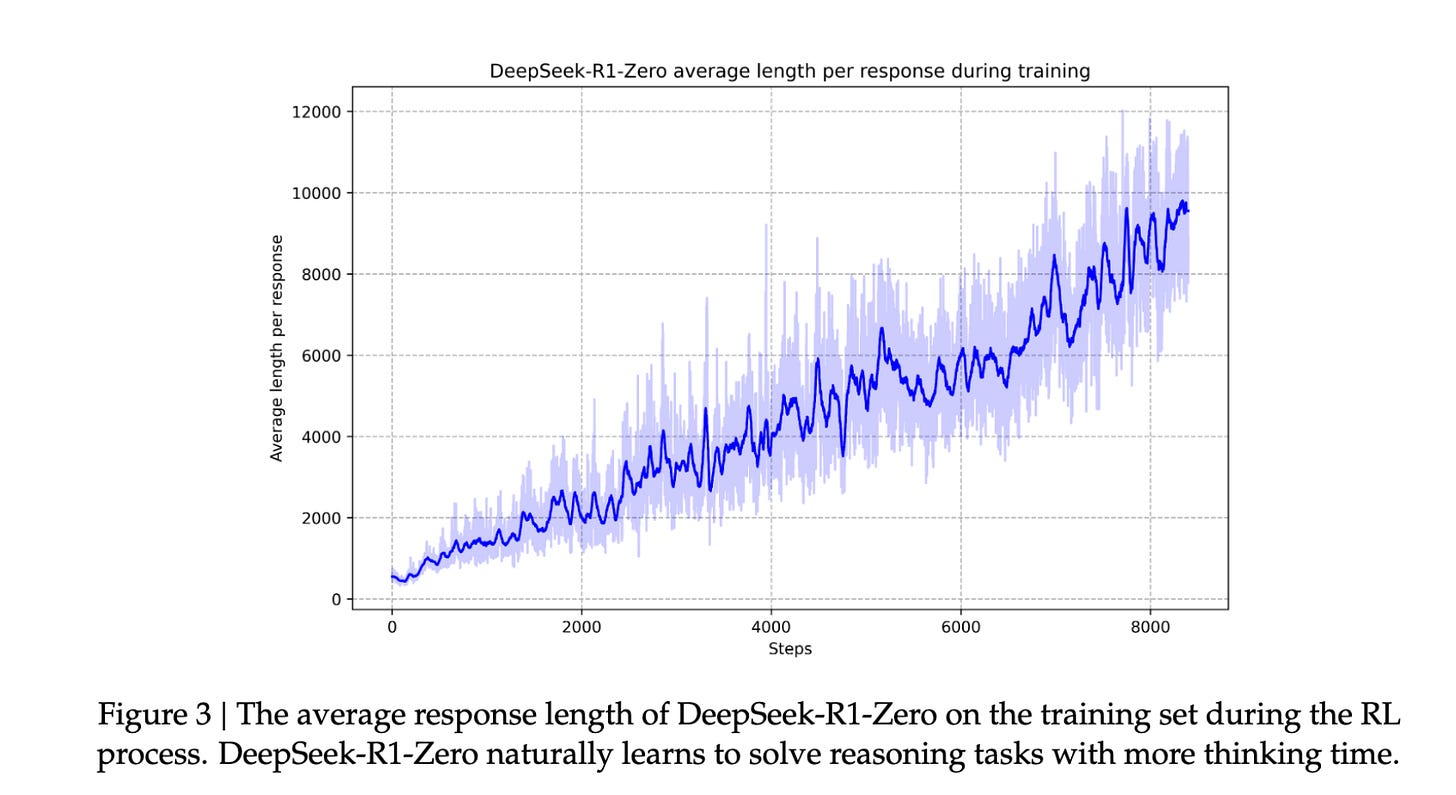

Advanced reasoning LLMs such as o1, o3, and R1 demonstrate strong capabilities across many domains. However, their long reasoning chains lead to high latency, which makes them impractical for many real-world applications.

But do these models always require such long reasoning chains? Can we control their depth and tune the compute/quality tradeoff to fit product needs?

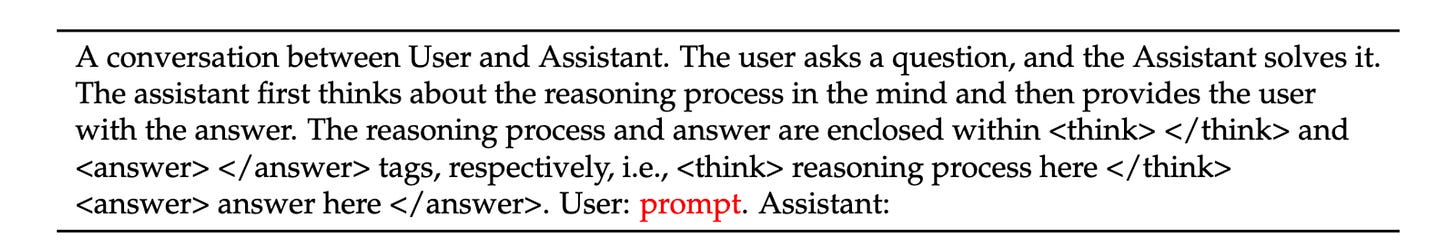

We experimented with different prompts to influence the number of reasoning tokens generated by the model. Our tests, conducted on both DeepSeek R1 and o1-mini, revealed that a simple suffix added to the original prompt effectively controls reasoning depth:

{original_prompt}

You must use exactly {complexity_level} reasoning sentences

At least in the case of R1, the term "reasoning" was part of the training prompt, which may explain the effectiveness of the technique.

It’s important to note that directly instructing the model to limit its number of reasoning steps was ineffective.
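The suffix template above can be applied programmatically. The following is a minimal sketch; the helper name `with_reasoning_budget` and the constant `SUFFIX_TEMPLATE` are illustrative choices of ours, and only the suffix wording comes from the experiments described here.

```python
# Suffix wording taken from the template above; the names are illustrative.
SUFFIX_TEMPLATE = "You must use exactly {complexity_level} reasoning sentences"


def with_reasoning_budget(original_prompt: str, complexity_level: int) -> str:
    """Append the sentence-count suffix to a prompt, separated by a blank line."""
    if complexity_level < 1:
        raise ValueError("complexity_level must be a positive integer")
    suffix = SUFFIX_TEMPLATE.format(complexity_level=complexity_level)
    return f"{original_prompt}\n\n{suffix}"


if __name__ == "__main__":
    print(with_reasoning_budget("Solve the following LeetCode problem: ...", 5))
```

The resulting string is sent to the model as a regular user prompt; no API-level parameter is assumed.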

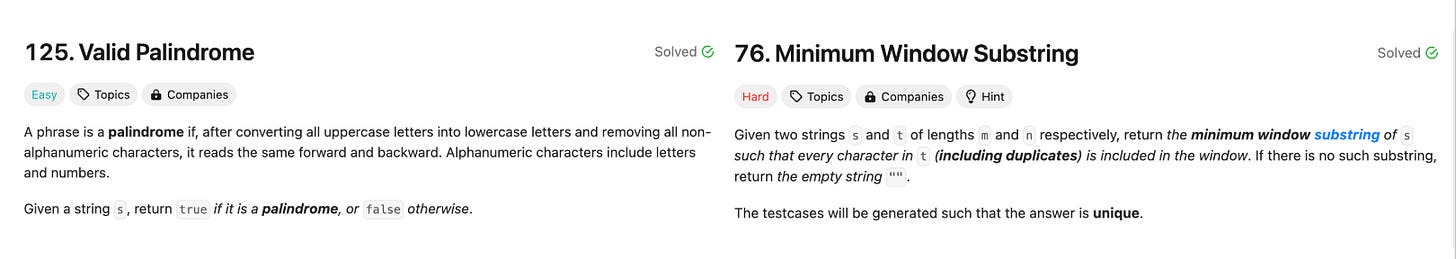

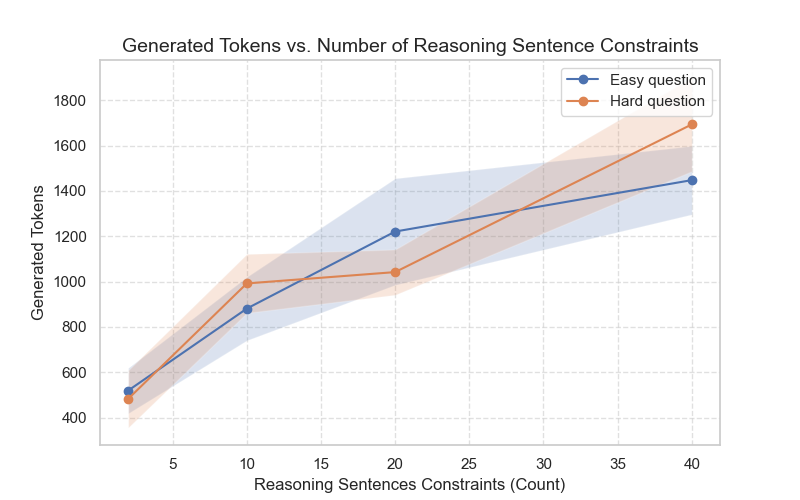

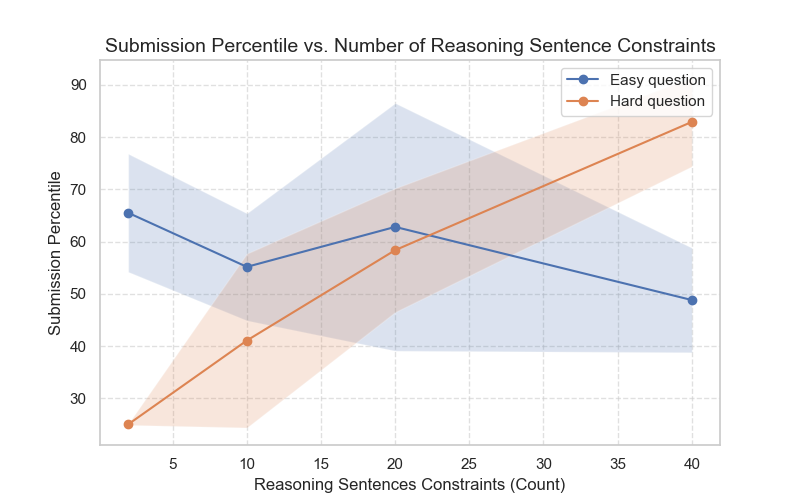

To test the tradeoff, we selected two LeetCode problems: one rated Hard and one rated Easy.

The model was instructed to generate solutions with the best possible runtime, and we evaluated performance by submitting the generated code to LeetCode and analyzing its runtime percentile.

Using o1-mini, we tested different constraints on the number of reasoning sentences.
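A sweep like this can be sketched as a small loop. This is only an outline under stated assumptions: `call_model` is a hypothetical callable that you supply, which sends a prompt to the model and returns the answer text together with the number of reasoning tokens reported by your provider. It is not part of any specific SDK.

```python
from typing import Callable, Iterable

# Suffix wording from the experiment; everything else here is illustrative.
SUFFIX = "You must use exactly {level} reasoning sentences"


def sweep_sentence_limits(
    original_prompt: str,
    levels: Iterable[int],
    call_model: Callable[[str], tuple[str, int]],
) -> list[dict]:
    """Run the same prompt under several sentence limits and record the token usage.

    `call_model` is a hypothetical hook: it takes the full prompt and returns
    (answer_text, reasoning_token_count).
    """
    results = []
    for level in levels:
        prompt = f"{original_prompt}\n\n{SUFFIX.format(level=level)}"
        answer, reasoning_tokens = call_model(prompt)
        results.append(
            {"level": level, "reasoning_tokens": reasoning_tokens, "answer": answer}
        )
    return results
```

Each returned record pairs a sentence limit with the observed reasoning-token count, so the generated answers can then be submitted and scored separately.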

The results show that limiting the number of reasoning sentences via prompting effectively controls reasoning length for both the easy and the hard question.

o1-mini consistently generated correct code that passed LeetCode’s acceptance tests. However, we also prompted the model to optimize running time, and the runtime percentile revealed an interesting pattern:

Latency remains a significant challenge for deploying reasoning models in production. Our findings demonstrate that a simple prompting technique can effectively regulate reasoning complexity, offering a practical way to balance latency and quality.

While there is a tradeoff between reasoning depth and solution quality, our experiments show that beyond a certain point, longer reasoning chains offer diminishing returns. This highlights the need to optimize reasoning complexity per task. We hope that LLM providers will introduce more natural controls over the compute-quality tradeoff.
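One way to act on the diminishing-returns observation is to pick, per task, the smallest sentence limit whose quality is close to the best observed. The sketch below assumes you have already measured a quality score (for example, a runtime percentile, higher is better) for each limit; the function name and the `tolerance` parameter are our own illustrative choices, not part of the experiments.

```python
def smallest_sufficient_level(
    measurements: list[tuple[int, float]], tolerance: float = 5.0
) -> int:
    """Return the smallest sentence limit whose quality is within `tolerance`
    of the best quality observed.

    `measurements` is a list of (sentence_limit, quality) pairs, where a
    higher quality value is better.
    """
    if not measurements:
        raise ValueError("measurements must not be empty")
    best_quality = max(quality for _, quality in measurements)
    good_enough = [
        level for level, quality in measurements if quality >= best_quality - tolerance
    ]
    return min(good_enough)
```

A larger `tolerance` trades more quality for lower latency; setting it to zero always returns a limit that reaches the best observed quality.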